The AI Playbook (Part 6): The Solution (Part 1) — The 'Govern' Pillar

Table of Contents

- Introduction: Building the Foundation

- What is an AI "System of Record"?

- How the "Govern" Module Solves the Legal-Tech Divide

- Conclusion

Introduction: Building the Foundation

We have established that manual, spreadsheet-based governance fails (see Part 5). It is too slow, too static, and too error-prone to manage the dynamic risk of AI.

The solution is a platform approach. And that solution must start with a solid foundation.

This is the "Govern" pillar.

This pillar is not just a feature; it is the central "system of record" for all AI in your enterprise. It is the "command center" and "single pane of glass" that your CCO, GC, and CISO have been missing. It is the direct, operational answer to the NIST RMF's "Govern" function (from Part 3), which we identified as the foundational pillar for all other compliance activities.

What is an AI "System of Record"?

You cannot comply with a policy you cannot enforce. And you cannot enforce a policy on models you do not know you have. The "Govern" pillar solves this by creating a "structured and well-classified inventory" that connects your policies to your actual AI assets. It is composed of three essential parts.

1. The Live, Automated Model Inventory

This is not a static spreadsheet. It is a "central database" and a "unified asset inventory". A platform's "Govern" module provides this "AI Registry". Critically, it automates discovery through "seamless model and agent registration". It uses API integrations to "automatically capture and register models... as they are created" in your MLOps tools (like Azure ML or Vertex AI).
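To make automated registration concrete, here is a minimal sketch in Python. It assumes the MLOps tool emits a "model created" event that some integration forwards to the registry. The event shape, the `ModelRecord` fields, and the `ModelRegistry` class are all hypothetical illustrations, not the API of Azure ML, Vertex AI, or any specific governance product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelRecord:
    """One inventory entry. All field names are illustrative assumptions."""
    model_id: str
    name: str
    source: str   # which MLOps tool reported the model, e.g. "vertex-ai"
    owner: str
    registered_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class ModelRegistry:
    """In-memory stand-in for the central inventory database."""

    def __init__(self) -> None:
        self._records: dict[str, ModelRecord] = {}

    def on_model_created(self, event: dict) -> ModelRecord:
        """Register a model from a (hypothetical) 'model created' event.

        Registration is idempotent: a repeated event for the same model
        returns the existing record instead of creating a duplicate.
        """
        key = f"{event['source']}:{event['model_id']}"
        if key not in self._records:
            self._records[key] = ModelRecord(
                model_id=event["model_id"],
                name=event["name"],
                source=event["source"],
                owner=event.get("owner", "unassigned"),
            )
        return self._records[key]

    def all_models(self) -> list[ModelRecord]:
        return list(self._records.values())


registry = ModelRegistry()
event = {"source": "vertex-ai", "model_id": "m-001", "name": "churn-predictor"}
registry.on_model_created(event)
registry.on_model_created(event)  # duplicate event, no second entry
print(len(registry.all_models()))
```

The key design point is idempotency: integrations tend to redeliver events, so the registry keys each record on source plus model ID rather than trusting every event to be new.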

2. Automated Risk Assessment & Classification

Once a model is in the inventory, you can govern it. This is where you move from "unknown risk" to "managed risk." The platform allows you to "label" and "classify" every model by "Risk Level," "Use Case Type," and "Regulatory Sensitivity". This is the kind of risk-based classification the EU AI Act is built around. You can now flag a model as "High-Risk," which automatically triggers deeper governance workflows.
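A rough sketch of how a label can trigger workflows, again in Python. The three label fields come from the text above; the workflow names and the example use cases are assumptions chosen for illustration, and the risk level is supplied by a person rather than derived from any regulation.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskLevel(Enum):
    LOW = "Low"
    MEDIUM = "Medium"
    HIGH = "High-Risk"


# Hypothetical workflow names; a real platform would define its own.
HIGH_RISK_WORKFLOWS = ["fairness_scan", "human_review", "documentation_audit"]


@dataclass
class Classification:
    risk_level: RiskLevel
    use_case_type: str
    regulatory_sensitivity: str
    triggered_workflows: list[str] = field(default_factory=list)


def classify(
    risk_level: RiskLevel, use_case_type: str, regulatory_sensitivity: str
) -> Classification:
    """Attach the three labels and queue extra workflows for High-Risk models."""
    result = Classification(risk_level, use_case_type, regulatory_sensitivity)
    if risk_level is RiskLevel.HIGH:
        result.triggered_workflows = list(HIGH_RISK_WORKFLOWS)
    return result


hiring = classify(RiskLevel.HIGH, "Candidate screening", "EU AI Act")
chatbot = classify(RiskLevel.LOW, "Internal FAQ bot", "None")
print(hiring.triggered_workflows)
print(chatbot.triggered_workflows)
```

Only the "High-Risk" label queues additional work here; lower-risk models pass through with an empty workflow list.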

3. Policy-as-Code & Framework Mapping

This is the "Rosetta Stone" we discussed in Part 4. The "Govern" module acts as your "centralized policy enforcement" hub. It is where you define your internal rules (e.g., "No model can be deployed without a fairness scan"). The platform then automates the hard work of "regulatory alignment". It maps each internal policy to the many external controls it helps satisfy across the EU AI Act, NIST RMF, ISO 42001, and others.
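One way to picture policy-as-code with framework mapping is a rule that carries both an executable check and the list of external controls it supports. The sketch below assumes a model is described by a plain dictionary with a `fairness_scan_passed` flag. The `Policy` structure and the `POL-001` identifier are hypothetical, and the control identifiers are deliberate placeholders rather than real clause numbers.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    policy_id: str
    description: str
    check: Callable[[dict], bool]      # returns True when the model complies
    mapped_controls: tuple[str, ...]   # external controls this rule supports


# Control identifiers below are PLACEHOLDERS, not real clause numbers.
fairness_policy = Policy(
    policy_id="POL-001",
    description="No model can be deployed without a fairness scan",
    check=lambda model: model.get("fairness_scan_passed") is True,
    mapped_controls=(
        "EU AI Act: <placeholder control>",
        "NIST AI RMF: <placeholder control>",
        "ISO 42001: <placeholder control>",
    ),
)


def evaluate(model: dict, policies: list[Policy]) -> dict[str, list[str]]:
    """Return, for each failed policy, the external controls left unsatisfied."""
    return {
        p.policy_id: list(p.mapped_controls)
        for p in policies
        if not p.check(model)
    }


gaps = evaluate({"name": "credit-scorer"}, [fairness_policy])
ok = evaluate(
    {"name": "credit-scorer", "fairness_scan_passed": True}, [fairness_policy]
)
print(gaps)
print(ok)
```

Because the mapping lives on the policy itself, one failed internal check can be reported in the vocabulary of every framework it touches.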

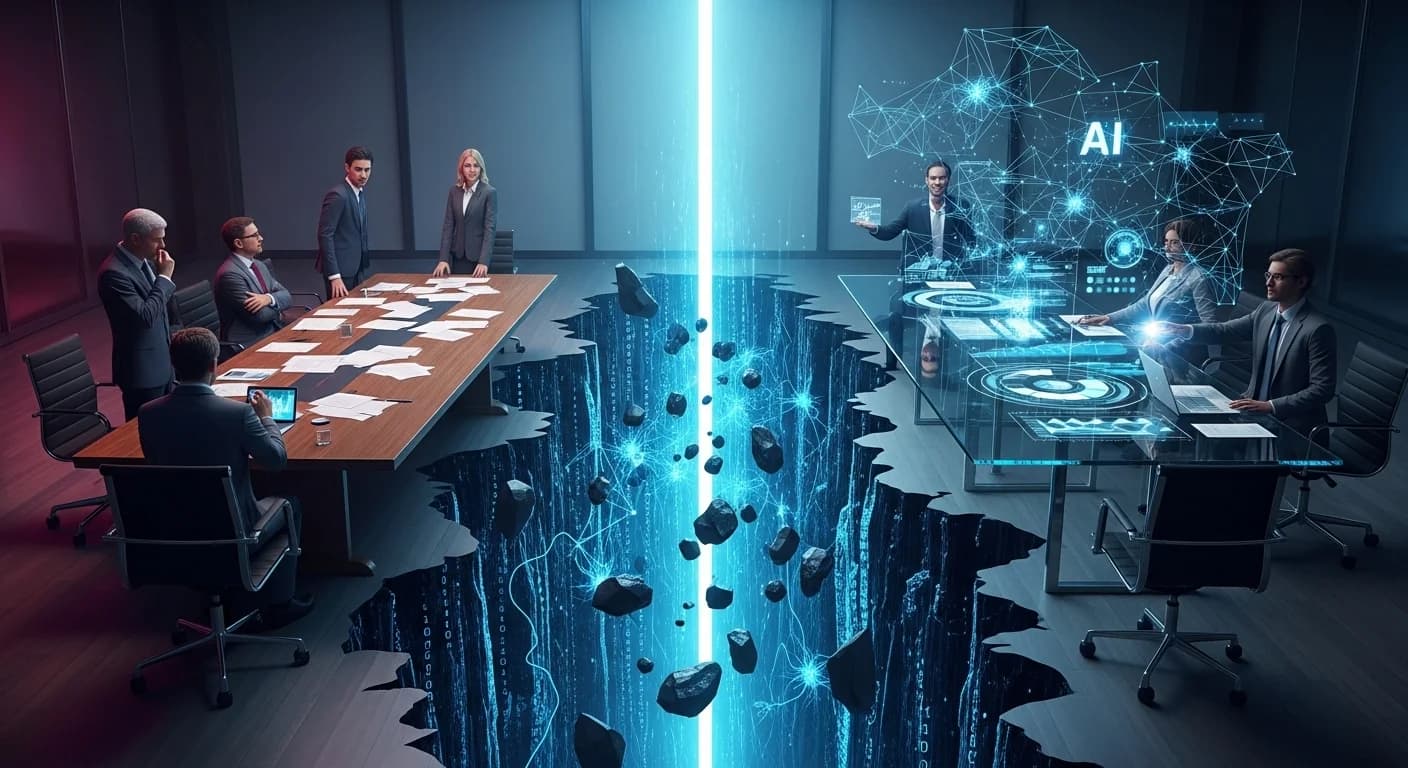

How the "Govern" Module Solves the Legal-Tech Divide

The EU AI Act and NIST RMF have, for the first time, joined your General Counsel and your Head of MLOps at the hip. The problem is, they do not speak the same language. Your GC understands "Risk Assessments". Your MLOps team understands "API integration" and "ML lifecycles".

A "Govern" module is the translation layer between them. When your GC or CCO uses the platform's user-friendly interface to flag a model as "High-Risk" (a legal/business action), it automatically enforces a "policy-aware risk assessment" in the technical MLOps pipeline. This action can automatically block a model's deployment until a human-in-the-loop review is complete.
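The deployment block described above can be sketched as a gate that the pipeline calls before release. This is a minimal illustration under stated assumptions: the `ModelStatus` fields, the `DeploymentBlocked` exception, and the `deployment_gate` function are hypothetical names, and a real pipeline would read the label and review status from the governance platform rather than from a local object.

```python
from dataclasses import dataclass


@dataclass
class ModelStatus:
    name: str
    risk_level: str                      # label set in the governance interface
    human_review_complete: bool = False


class DeploymentBlocked(Exception):
    """Raised when a governance rule prevents deployment."""


def deployment_gate(model: ModelStatus) -> str:
    """Pipeline step: refuse to deploy High-Risk models lacking human review."""
    if model.risk_level == "High-Risk" and not model.human_review_complete:
        raise DeploymentBlocked(
            f"{model.name}: human review required before deployment"
        )
    return f"{model.name}: cleared for deployment"


model = ModelStatus(name="loan-approver", risk_level="High-Risk")

try:
    deployment_gate(model)
    blocked = False
except DeploymentBlocked:
    blocked = True

model.human_review_complete = True       # reviewer signs off
result = deployment_gate(model)
print(blocked, result)
```

The legal action (setting the label) and the technical consequence (the raised exception) meet in a single check, which is the translation the section describes.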

This "single source of truth" is the only way to operationalize the NIST "Govern" function and make compliance a scalable, automated reality.

Conclusion

A "Govern" platform gives you a single pane of glass to see all your AI and its associated risks. For the first time, you have a complete, real-time, and actionable inventory.

But seeing isn't enough. You have to measure. You must monitor those models 24/7, especially the ones you've labeled "High-Risk."

Next in Part 7: The "Monitor" Pillar, we'll cover your automated watchdog for live AI.