The AI Playbook (Part 5): Why Your 'Compliance-by-Spreadsheet' Will Fail

Table of Contents

- Introduction: The "Snapshot-in-Time" Compliance Model

- The Three Reasons AI Breaks This Model

- The Inherent Failures of the Spreadsheet

- The "False Sense of Security"

- Conclusion: From Manual Governance to Automated Governance

Introduction: The "Snapshot-in-Time" Compliance Model

In the old world of governance—a world that ended about 18 months ago—compliance was a "snapshot-in-time." You did an audit, you filled out a checklist in Excel, an auditor signed off, and you were "compliant" for the year. Your GRC (Governance, Risk, and Compliance) tools were built for this static world.

That world is gone. That model is dead.

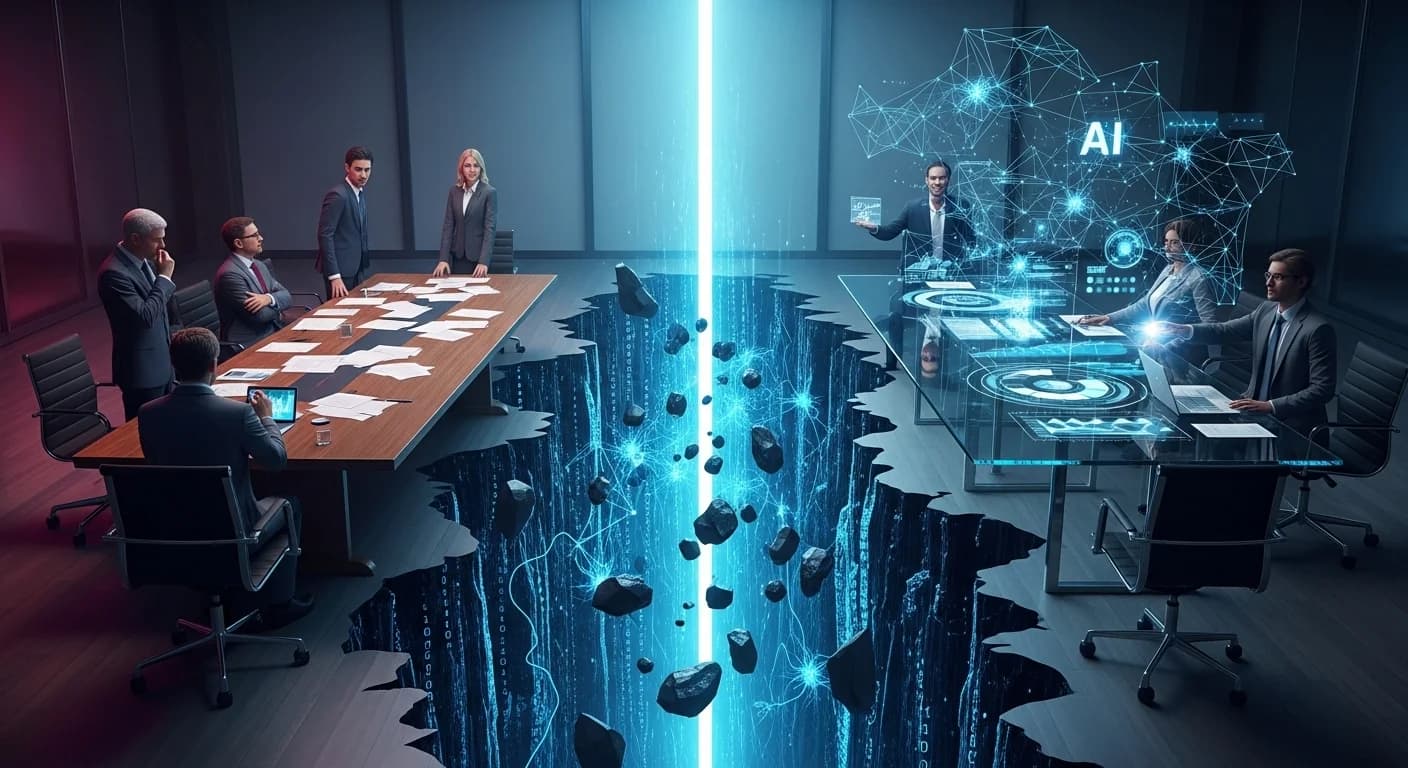

When applied to the dynamic, high-speed, and opaque world of AI, your "compliance-by-spreadsheet" is not just inefficient. It is a categorical failure that creates a dangerous "false sense of security".

The Three Reasons AI Breaks This Model

Your spreadsheets and manual checklists are fundamentally mismatched with the asset they are trying to govern.

1. Speed & Scale

Your teams are retraining and deploying models weekly, daily, or even hourly—not annually. You don't have 3 models; you have 3,000. A spreadsheet cannot "scale" to this reality. It is a "labor-intensive process" in a world that demands automation.

2. The Dynamic & Drifting Nature

This is the "Shadow Risk" that should terrify a CRO. An AI model is not a static asset like a server. It is a dynamic asset that interacts with live, unpredictable data, and because of that, it drifts. "AI models degrade. It's not a bug, it's inevitable". Drift also cuts across fairness: "A fair model today can become biased tomorrow". And the cost of ignoring it is concrete: "Failure to monitor drift leads to performance decay" and massive "regulatory exposure".
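To make "drift" concrete, here is a minimal sketch of one common way to measure it: the Population Stability Index (PSI), which compares the distribution of a feature or score at training time against what the model sees in production. The function name, the ten-bin default, and the 0.25 alert threshold are illustrative assumptions (0.25 is a widely used rule of thumb, not a regulatory standard), and this is not presented as the method any particular platform uses.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], live: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of the same feature; larger values mean more drift."""
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline has no spread; cannot build bins")
    width = (hi - lo) / bins

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Live values outside the baseline range are clamped into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    base_share, live_share = shares(baseline), shares(live)
    return sum(
        (l - b) * math.log(l / b) for b, l in zip(base_share, live_share)
    )


# Hypothetical data: scores at training time vs. scores seen in production.
training_scores = [i / 100 for i in range(100)]
production_scores = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(training_scores, production_scores)
drift_alert = psi > 0.25  # assumed rule-of-thumb threshold
```

The point is not the specific metric. It is that a check like this can run on every batch of production data, which is something a checklist filled out once a year cannot do.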

3. The "Black Box" Problem

In a growing number of regulated contexts, you are expected to explain why your model made a given decision. But for many models, your team cannot explain the opaque logic. Because legal liability often turns on intent and causation, a "black box" whose reasoning cannot be reconstructed creates a massive, unmanaged liability.

The Inherent Failures of the Spreadsheet

Now, let's look at the tool itself. Your "compliance-by-spreadsheet" is a house of cards, and the first gust of wind—a regulatory audit—will blow it over.

They are Static

Spreadsheets are "point in time". They are "always outdated" the moment you close the file. A static, outdated document cannot govern a dynamic, drifting asset.

They are Error-Prone

Research suggests that 88% of business spreadsheets contain errors. They are built on "manual data entry, formula mistakes, and version control problems".

They are Siloed & Rigid

Spreadsheets are "silo-inducing" and "too rigid". They cannot create a "single source of truth." Your legal team's compliance spreadsheet has no connection to your MLOps team's deployment logs. They are separate, untracked, and ungoverned.

They Have No Audit Trail

This is a critical failure. "The audit trail goes up in smoke". When a regulator asks you to prove what your model's bias score was six months ago, your spreadsheet is useless. You have no "record-keeping", which for high-risk systems puts you at odds with the logging obligations of the EU AI Act.
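For contrast, here is a rough sketch of what a tamper-evident audit trail can look like: an append-only log in which every record carries a hash of the record before it, so a quiet edit to a six-month-old bias score breaks the chain. All names here (`append_record`, `verify_chain`, the field names, the model ID) are hypothetical, and a production system would also need durable storage and access controls that this sketch leaves out.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List, Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: Dict) -> str:
    # Serialize with sorted keys so the same record always hashes the same way.
    return hashlib.sha256(
        json.dumps(body, sort_keys=True).encode("utf-8")
    ).hexdigest()


def append_record(
    log: List[Dict],
    model_id: str,
    metric: str,
    value: float,
    recorded_at: Optional[str] = None,
) -> Dict:
    """Append a metric reading, chained to the hash of the previous record."""
    body = {
        "model_id": model_id,
        "metric": metric,
        "value": value,
        "recorded_at": recorded_at or datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: List[Dict]) -> bool:
    """Return False if any record was altered, removed, or reordered."""
    prev = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
            return False
        prev = entry["hash"]
    return True


# Hypothetical usage: two bias-score readings for one model.
audit_log: List[Dict] = []
append_record(audit_log, "credit-model-v7", "bias_score", 0.04, "2024-01-15T00:00:00+00:00")
append_record(audit_log, "credit-model-v7", "bias_score", 0.11, "2024-07-15T00:00:00+00:00")
intact_before_edit = verify_chain(audit_log)

audit_log[0]["value"] = 0.01  # someone "fixes" the old number in place
intact_after_edit = verify_chain(audit_log)
```

A spreadsheet cell can be overwritten without leaving a trace. In a chained log like this one, the same overwrite is detectable the next time the chain is verified.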

The "False Sense of Security"

This is the central danger. Spreadsheets are not just inefficient; they are actively harmful.

A spreadsheet that is "always outdated" for a dynamic, drifting asset is not just a "poor tool." It is a dangerous lie.

It provides a "false sense of security". It creates a "policy placebo". Your CRO, your CEO, and your board of directors think you are compliant because a box is checked in an Excel file. But in reality, your live, production-grade model has been silently drifting into discriminatory, non-compliant behavior for months.

The "shadow risk" is accumulating in the gaps between your manual, "snapshot-in-time" audits.

Conclusion: From Manual Governance to Automated Governance

A spreadsheet cannot monitor a live model for bias 24/7. A Word document cannot trace a model's lineage from training data to production. You must move from manual, static governance to automated, dynamic, operationalized governance.
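As one illustration of what "automated" can mean here, the sketch below checks a batch of live decisions for disparity between groups by comparing approval rates. It uses the disparate impact ratio with a 0.8 alert threshold, borrowed from the "four-fifths" rule of thumb in US employment guidance. The choice of metric, the threshold, and every name in the code are assumptions for illustration; which fairness metric is appropriate depends on your use case and jurisdiction.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Decision = Tuple[str, bool]  # (group label, was the outcome favorable?)


def selection_rates(decisions: List[Decision]) -> Dict[str, float]:
    """Share of favorable outcomes per group."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        favorable[group] += int(outcome)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions: List[Decision]) -> float:
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest


def check_batch(decisions: List[Decision], threshold: float = 0.8) -> Dict:
    """Evaluate one batch of production decisions and flag it if needed."""
    ratio = disparate_impact_ratio(decisions)
    return {"ratio": ratio, "alert": ratio < threshold}


# Hypothetical batch: group A approved 8 of 10 times, group B 4 of 10 times.
batch = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
result = check_batch(batch)
```

Run on a schedule against production decisions, with each result written to a system of record, a check like this closes the gap between audits that a manual process leaves open.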

The solution starts with building a foundation. You must have a single source of truth. Next, in Part 6: The "Govern" Pillar, we introduce your central, automated system of record for all AI.