Beyond Structure: Why Boltz-2 and the 'Interaction Era' Matter for Drug Discovery

Table of Contents

- Introduction: The Post-AlphaFold Reality

- The Core Shift: Unified Tokenization and Diffusion

- Boltz-2 Under the Hood: The Affinity Head Innovation

- The Efficiency Frontier

- Architectural Divergence: Boltz-2 vs. The Field

- The Killer App: Generative Inverse Design

- Deployment: Running Boltz-2 at Scale

- Final Thoughts: The New Standard

Introduction: The Post-AlphaFold Reality

For the last three years, the field of structural biology has been living in the "Post-AlphaFold" reality. We solved the static folding problem for monomers, but for those of us in drug discovery, a perfectly folded protein is just the starting line. The real challenge—and the real value—lies in binding: predicting how that protein interacts with ligands, nucleic acids, and other proteins in a dynamic environment.

This year, the release of Boltz-2 by the MIT Jameel Clinic and Recursion has signaled a shift from structure prediction to interaction modeling. This is not just an incremental update; it is an architectural fork designed explicitly to bridge the "Affinity Gap" that has plagued deep learning models to date.

In this post, we take a technical deep dive into Boltz-2, comparing it with AlphaFold 3 (AF3) and Chai-1, and analyzing why "all-atom co-folding" is the new standard for lead identification.

The Core Shift: Unified Tokenization and Diffusion

To understand why the current generation of models outperforms classical docking, you have to look at the tokenization.

In the old stack (e.g., AlphaFold 2 + AutoDock Vina), the protein and the ligand were treated as separate entities. The protein was a sequence of residues folded on its own; the ligand was a separate molecular graph. The "docking" was a post-hoc optimization problem, typically trying to fit a flexible ligand into a rigid receptor structure.

Boltz-2 and AF3 change the primitive. They utilize a unified tokenization strategy where biological and chemical matter are processed in the same heterogeneous graph:

- Proteins and standard DNA/RNA: Tokenized at the residue or nucleotide level (with atom-level decoding)

- Ligands (and non-standard residues): Tokenized at the atomic level

This allows the model's attention mechanism to attend to a ligand atom with the same fidelity as a protein residue. The result is a true "induced fit" prediction: the protein side-chains and backbone adjust in real-time to the steric and electrostatic presence of the ligand during the generation process.
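A minimal sketch of that unified token stream, assuming a simplified `Token` record and a hypothetical `tokenize_complex` helper (neither comes from the Boltz-2 or AF3 code). It only illustrates that residues and ligand atoms end up in one sequence that attention can operate over:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    kind: str   # "residue" for polymer units, "atom" for ligand atoms
    chain: str  # chain identifier the token belongs to
    label: str  # one-letter residue code or element symbol


def tokenize_complex(protein_seq: str, ligand_elements: list[str]) -> list[Token]:
    """Hypothetical helper: one token per residue, one token per ligand atom."""
    tokens = [Token("residue", "A", aa) for aa in protein_seq]
    tokens += [Token("atom", "B", el) for el in ligand_elements]
    return tokens


# Toy input: a 3-residue peptide and a 4-heavy-atom ligand fragment.
tokens = tokenize_complex("MKT", ["C", "C", "O", "N"])
print(len(tokens), [t.kind for t in tokens])
```

Because every token lives in the same list, a pairwise attention layer sees residue-atom pairs exactly as it sees residue-residue pairs.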

From Single-Pass to Diffusion

Instead of predicting rotation/translation matrices in a single pass (like AF2), these models use diffusion. They start with a noise distribution and iteratively denoise the coordinates of the entire complex simultaneously. This captures the joint probability distribution of the protein-ligand state, rather than just the lowest-energy state of the protein alone.

The diffusion paradigm enables several critical capabilities:

- Uncertainty quantification through multiple sampling passes

- Ensemble generation of plausible binding poses

- Joint optimization of protein conformation and ligand placement
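To make the sampling idea concrete, here is a toy sketch of iterative denoising over a whole set of coordinates. Everything in it is an assumption for illustration: `toy_denoiser` is a hypothetical stand-in for the trained network (it simply snaps to the nearest of two known poses), and the update is a deterministic Euler step in the style of EDM samplers, not code taken from Boltz-2 or AF3.

```python
import numpy as np


def toy_denoiser(x, poses):
    """Hypothetical stand-in for the network: return the closest known pose."""
    dists = [np.linalg.norm(x - p) for p in poses]
    return poses[int(np.argmin(dists))]


def sample_pose(poses, n_steps=30, sigma_max=10.0, sigma_min=0.01, seed=0):
    """Start from Gaussian noise and denoise all coordinates jointly."""
    rng = np.random.default_rng(seed)
    # Decreasing noise schedule, ending at exactly zero noise.
    sigmas = np.append(np.geomspace(sigma_max, sigma_min, n_steps), 0.0)
    x = rng.normal(scale=sigma_max, size=poses[0].shape)
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        x0_hat = toy_denoiser(x, poses)
        # Euler step: shrink the residual noise by the ratio sigma_next / sigma.
        x = x0_hat + (sigma_next / sigma) * (x - x0_hat)
    return x


# Two plausible "binding poses" for a 5-atom toy system.
pose_a = np.zeros((5, 3))
pose_b = np.full((5, 3), 4.0)

# Multiple sampling passes with different seeds form an ensemble.
ensemble = [sample_pose([pose_a, pose_b], seed=s) for s in range(8)]
per_atom_spread = np.std(np.stack(ensemble), axis=0).mean(axis=1)
print(per_atom_spread)
```

Each seed converges to one of the two poses, and the spread across the ensemble is a crude proxy for the uncertainty that repeated sampling exposes.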

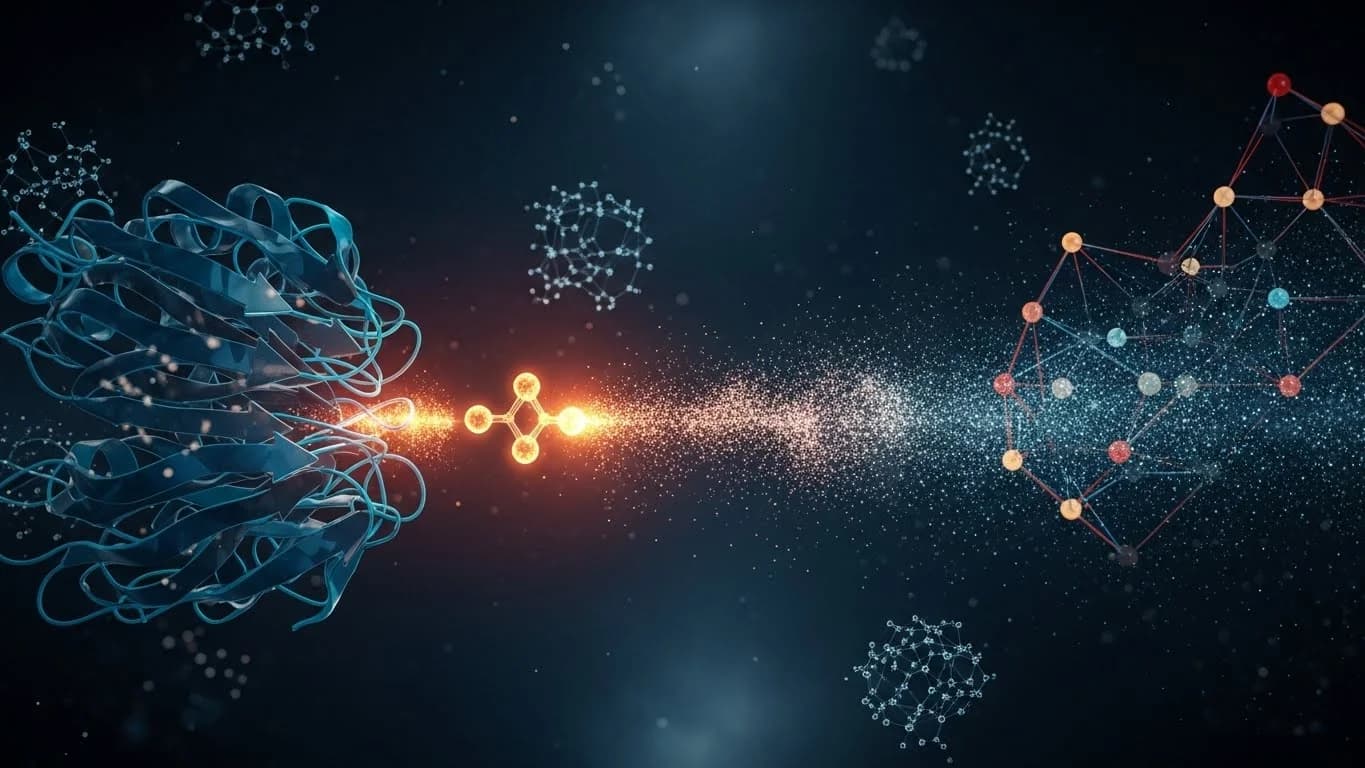

Boltz-2 Under the Hood: The Affinity Head Innovation

While AF3 defined the architecture, Boltz-2 refined it for pharma. The most critical differentiation is its explicit focus on binding affinity.

AlphaFold 3 predicts structure. It does not natively tell you if a ligand is a nanomolar binder or a micromolar binder—it just gives you a confident pose. Boltz-2 introduces a Dual-Head Affinity Module that branches off the main PairFormer trunk:

| Head Type | Output | Optimized For |
|---|---|---|
| Binary Classification | Logistic score (0-1) predicting probability of binding | Hit Discovery (triage) |
| Continuous Regression | Prediction of pKd or pIC50 | Lead Optimization (ranking) |

This module was trained on approximately 750,000 high-quality protein-ligand pairs from ChEMBL and BindingDB. The architectural significance here is that the affinity prediction is conditioned on the generated structure. If the model hallucinates a bad pose, the affinity head (ideally) recognizes the poor contacts and penalizes the score.
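As a rough illustration of how the two outputs might be consumed downstream, the sketch below triages on the classification output and then ranks by the regression output. The record fields (`binder_logit`, `pkd`) and the 0.5 threshold are hypothetical and do not reflect Boltz-2's actual output format; the only fixed fact used is the definition pKd = -log10(Kd in mol/L).

```python
import math


def sigmoid(logit: float) -> float:
    """Map a raw logit to a 0-1 binding probability."""
    return 1.0 / (1.0 + math.exp(-logit))


def pkd_to_nanomolar(pkd: float) -> float:
    """pKd = -log10(Kd in mol/L), so Kd in nM is 10**(9 - pKd)."""
    return 10.0 ** (9.0 - pkd)


def triage_and_rank(preds, min_prob=0.5):
    """Keep likely binders (classification), then sort by pKd (regression)."""
    hits = [p for p in preds if sigmoid(p["binder_logit"]) >= min_prob]
    return sorted(hits, key=lambda p: p["pkd"], reverse=True)


# Hypothetical predictions for three compounds.
preds = [
    {"id": "cpd-1", "binder_logit": 2.0, "pkd": 7.5},
    {"id": "cpd-2", "binder_logit": -1.5, "pkd": 8.0},
    {"id": "cpd-3", "binder_logit": 0.8, "pkd": 9.0},
]
ranked = triage_and_rank(preds)
for p in ranked:
    print(p["id"], f"{pkd_to_nanomolar(p['pkd']):.1f} nM")
```

Note that `cpd-2` is dropped despite its high predicted pKd: the triage head gates the ranking head, which mirrors the hit-discovery versus lead-optimization split in the table.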

The Structure-Affinity Coupling

The key insight is that Boltz-2 does not treat structure prediction and affinity prediction as separate problems. The affinity head receives embeddings from the same transformer trunk that generates the structure, creating a feedback loop where:

- Poor predicted contacts → Low affinity score

- Low affinity score → Signal to refine structure

- Refined structure → Better contact prediction

This coupling is what enables Boltz-2 to approach physics-based accuracy without the computational cost.

The Efficiency Frontier

The claim that has everyone talking is that Boltz-2 approaches Free Energy Perturbation (FEP) accuracy (R ≈ 0.66 vs R ≈ 0.7–0.8 for FEP) while being 1,000x faster.

| Method | Correlation (R) | Time per Complex | Use Case |
|---|---|---|---|
| Classical Docking | ~0.3–0.4 | Seconds | Initial screening |
| Boltz-2 | ~0.66 | ~20 seconds (H100) | High-throughput screening |
| FEP/MD | ~0.7–0.8 | Hours to days | Final validation |

While FEP remains the gold standard for final validation, Boltz-2 effectively democratizes "good enough" affinity prediction for high-throughput screening, running at approximately 20 seconds per complex on an H100 GPU.

The Economics of Screening

Consider a typical virtual screening campaign:

- Library size: 1 million compounds

- Classical docking: ~1 week on a cluster

- Boltz-2 screening: ~5,600 GPU-hours at ~20 seconds per complex (about 230 GPU-days, or roughly one day of wall-clock time on 230 H100s)

- FEP on 1M compounds: Computationally infeasible

Boltz-2 occupies a critical middle ground: fast enough for library-scale screening, accurate enough to dramatically reduce false positives before wet-lab validation.
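The GPU budget follows directly from library size and per-complex inference time. A back-of-the-envelope sketch, assuming the ~20 second figure holds for every complex and that throughput scales perfectly across GPUs (both simplifications; MSA generation and I/O are ignored):

```python
def gpu_hours(n_compounds: int, seconds_per_complex: float) -> float:
    """Total single-GPU compute needed to score the whole library."""
    return n_compounds * seconds_per_complex / 3600.0


def wall_clock_hours(n_compounds: int, seconds_per_complex: float, n_gpus: int) -> float:
    """Elapsed time assuming ideal, linear scaling across n_gpus."""
    return gpu_hours(n_compounds, seconds_per_complex) / n_gpus


total = gpu_hours(1_000_000, 20.0)
print(f"{total:,.0f} GPU-hours ({total / 24:,.0f} GPU-days)")
print(f"{wall_clock_hours(1_000_000, 20.0, 256):.1f} h on 256 GPUs")
```

One million complexes at 20 seconds each come to about 5,556 GPU-hours, so finishing "in hours" implies a fleet of several hundred GPUs.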

Architectural Divergence: Boltz-2 vs. The Field

The landscape is becoming crowded. Here is how the top contenders stack up architecturally:

| Feature | Boltz-2 (Open Source) | AlphaFold 3 (DeepMind) | Chai-1 (Chai Discovery) |
|---|---|---|---|
| Backbone | 64-layer PairFormer | 48-block PairFormer | PairFormer + pLM Embeddings |
| Tokenization | Unified (Atoms + Residues) | Unified (Atoms + Residues) | Unified |
| Inference | Diffusion | Diffusion | Diffusion |
| Affinity | Explicit Dual-Head | Implicit (pLDDT/PAE) | Implicit |
| Specialty | Method Conditioning (NMR/MD) | Ions/Metals | Single-Sequence Mode |
| License | MIT (Open Weights/Code) | Closed / Restricted | Apache 2.0 (Open) |

Key Takeaways

AlphaFold 3 is still superior for metal ion coordination and complex PTMs due to its massive, diverse training set. When your target involves zinc fingers, iron-sulfur clusters, or heavily glycosylated proteins, AF3 remains the gold standard.

Chai-1 is the go-to for orphan proteins (single-sequence mode), where MSAs are not available. For novel protein families with few homologs in sequence databases, Chai-1's protein language model embeddings provide critical context.

Boltz-2 wins on integration. Its open license and affinity head make it the only viable "drop-in" replacement for a proprietary docking pipeline. You can deploy it on-prem, fine-tune it on your internal data, and build production workflows around it without licensing concerns.

The Killer App: Generative Inverse Design

The most exciting application of Boltz-2 is not just screening—it is generation.

Because the entire pipeline is differentiable, we can invert the process. BoltzGen is a wrapper around the architecture that allows for "hallucinating" binders. Instead of inputting a ligand and asking "does it bind?", you input a pocket and a target affinity, and the model diffuses a molecular structure (or peptide sequence) that fits the latent representation of a high-affinity binder.

This closes the loop between Virtual Screening and De Novo Design:

Traditional Pipeline:

[Library] → [Screen] → [Hits] → [Optimize] → [Lead]

Generative Pipeline:

[Target Pocket] + [Desired Properties] → [BoltzGen] → [Novel Binders]

Early Results

In early benchmarks, this approach generated nanomolar binders for 66% of novel targets tested—a hit rate that is orders of magnitude higher than random library screening, which typically yields hit rates of 0.01–0.1%.

The generative approach also enables:

- Scaffold hopping: Generating chemically distinct molecules with similar binding profiles

- Property optimization: Conditioning generation on ADMET properties simultaneously

- Novelty exploration: Pushing into unexplored chemical space beyond existing libraries

Deployment: Running Boltz-2 at Scale

For technical teams looking to deploy this, the "Open Source" tag is the critical enabler. Unlike AF3, whose web server and model weights are limited to non-commercial use, Boltz-2 can be containerized and run on-prem, including for commercial work.

Infrastructure Options

NVIDIA BioNeMo: Boltz-2 is integrated as a NIM (NVIDIA Inference Microservice), optimized with cuEquivariance kernels to handle the massive compute of the 64-layer trunk.

Self-Hosted Deployment: The MIT license allows full deployment flexibility:

Example deployment considerations:

- Container: Docker/Singularity with CUDA 12.x

- Memory: 64GB+ GPU memory recommended

- Storage: Model weights ~15GB

- Networking: Consider batching for throughput
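For a self-hosted run, each complex is described in a small input file that is handed to the `boltz predict` command. The sketch below generates one such file from Python. The field names (`version`, `sequences`, `properties`, `affinity`, `binder`) are written from the public Boltz input schema as best understood here and should be treated as assumptions to verify against the version you install; the protein sequence is a toy placeholder and `build_affinity_input` is a hypothetical helper.

```python
import json


def build_affinity_input(protein_seq: str, ligand_smiles: str) -> str:
    """Return a YAML document describing one protein-ligand complex.

    Field names are assumed from the public Boltz input schema;
    check them against the documentation of your installed version.
    """
    return "\n".join([
        "version: 1",
        "sequences:",
        "  - protein:",
        "      id: A",
        f"      sequence: {protein_seq}",
        "  - ligand:",
        "      id: B",
        # json.dumps emits a double-quoted string, which is also valid YAML.
        f"      smiles: {json.dumps(ligand_smiles)}",
        "properties:",
        "  - affinity:",
        "      binder: B",
    ]) + "\n"


# Toy sequence and aspirin as the ligand, purely for illustration.
yaml_text = build_affinity_input("MKTAYIAKQR", "CC(=O)Oc1ccccc1C(=O)O")
print(yaml_text)
# The text would be saved (e.g. as complex.yaml) and passed to `boltz predict`.
```

Generating inputs programmatically like this is what makes library-scale runs practical: one template, one file per compound.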

Hardware Requirements

Boltz-2 is hungry. You are looking at H100s or A100s to get that ~20s inference time. Attempting to run this on consumer hardware is theoretically possible but impractical for library-scale work.

| Hardware | Inference Time (per complex) | Practical Use |
|---|---|---|
| H100 (80GB) | ~20 seconds | Production screening |
| A100 (80GB) | ~35 seconds | Production screening |
| A100 (40GB) | ~60 seconds | Development/testing |
| RTX 4090 | ~120+ seconds | Prototyping only |

Scaling Considerations

For library-scale screening (millions of compounds), consider:

- Batching: Group similar-sized ligands to maximize GPU utilization

- Precomputation: Cache protein embeddings for repeated screens against the same target

- Hierarchical filtering: Use faster methods (fingerprint similarity, 2D pharmacophore) for initial triage before Boltz-2
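The batching idea can be sketched in a few lines. This assumes heavy-atom counts have already been computed elsewhere (for example with a cheminformatics toolkit); `bucket_by_size` and `make_batches` are hypothetical helpers, and the bucket width and batch size are arbitrary illustration values.

```python
from collections import defaultdict


def bucket_by_size(atom_counts: dict[str, int], bucket_width: int = 10) -> dict[int, list[str]]:
    """Group ligand IDs so each bucket spans `bucket_width` heavy atoms."""
    buckets = defaultdict(list)
    for ligand_id, n_atoms in atom_counts.items():
        buckets[(n_atoms // bucket_width) * bucket_width].append(ligand_id)
    return dict(sorted(buckets.items()))


def make_batches(atom_counts: dict[str, int], batch_size: int = 2, bucket_width: int = 10):
    """Yield batches whose members have similar size, limiting padding waste."""
    for ids in bucket_by_size(atom_counts, bucket_width).values():
        for i in range(0, len(ids), batch_size):
            yield ids[i:i + batch_size]


# Hypothetical library: ligand ID -> heavy-atom count.
library = {"cpd-1": 18, "cpd-2": 34, "cpd-3": 12, "cpd-4": 37, "cpd-5": 31}
batches = list(make_batches(library))
print(batches)
```

Keeping similar-sized ligands together means each batch is padded to roughly the same token count, which is where the GPU utilization gain comes from.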

Final Thoughts: The New Standard

Boltz-2 is not a magic bullet. It still struggles with:

- Molecular glues: Ternary complex formation remains challenging

- Massive conformational changes: Induced fit beyond side-chain rearrangement

- Allosteric effects: Binding events far from the active site

- Covalent binders: Irreversible inhibitors require special handling

It is not a complete replacement for rigorous physics-based FEP when you need exact energy calculations (±1 kcal/mol).
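To put the ±1 kcal/mol figure in the same units as a pKd prediction, the standard relation ΔG = RT ln(Kd) can be applied. The sketch below assumes 298.15 K and a 1 M standard state; the function names are illustrative.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal/(mol*K)


def pkd_to_delta_g(pkd: float, temperature_k: float = 298.15) -> float:
    """Binding free energy in kcal/mol: dG = RT ln(Kd) = -RT ln(10) * pKd."""
    return -R_KCAL * temperature_k * math.log(10) * pkd


def kcal_to_pkd_units(delta_kcal: float, temperature_k: float = 298.15) -> float:
    """Convert a free-energy difference into the equivalent pKd difference."""
    return delta_kcal / (R_KCAL * temperature_k * math.log(10))


per_unit = -pkd_to_delta_g(1.0)          # kcal/mol per pKd unit
fold = 10 ** kcal_to_pkd_units(1.0)      # fold-change in Kd per 1 kcal/mol
print(f"{per_unit:.2f} kcal/mol per pKd unit; 1 kcal/mol is about {fold:.1f}-fold in Kd")
```

At room temperature one pKd unit is about 1.36 kcal/mol, so a ±1 kcal/mol target corresponds to resolving roughly a 5-fold difference in Kd.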

The Strategic Value

However, as a filter, it is revolutionary. By moving affinity filtering upstream, removing likely non-binders with structure-based inference before they ever reach the FEP or wet-lab stage, it fundamentally changes the economics of the funnel.

Consider the traditional drug discovery funnel:

| Stage | Compounds | Cost per Compound | Total Cost |
|---|---|---|---|
| Virtual Screen | 1,000,000 | $0.01 | $10,000 |
| Docking Hits | 10,000 | $1 | $10,000 |
| Biochemical Assay | 1,000 | $100 | $100,000 |
| Cell-Based Assay | 100 | $1,000 | $100,000 |

If Boltz-2 can reduce the docking-to-biochemical false positive rate by 50%, the downstream savings are substantial—not just in dollars, but in time-to-candidate.
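A quick way to see the effect on the biochemical assay stage, using the 1,000 compounds and $100 per compound from the table. The 90% baseline false-positive fraction is a purely illustrative assumption (it is not a figure from any benchmark), and the model keeps all true hits while halving the false positives:

```python
def assay_cost(n_compounds: int, cost_per_compound: float) -> float:
    """Total spend for one funnel stage."""
    return n_compounds * cost_per_compound


def compounds_after_filter(n_sent: int, false_positive_fraction: float, fp_reduction: float) -> int:
    """Compounds still sent to assay if a filter removes a share of false positives."""
    true_hits = n_sent * (1.0 - false_positive_fraction)
    false_pos = n_sent * false_positive_fraction * (1.0 - fp_reduction)
    return round(true_hits + false_pos)


baseline = assay_cost(1_000, 100)
filtered_n = compounds_after_filter(1_000, false_positive_fraction=0.9, fp_reduction=0.5)
filtered = assay_cost(filtered_n, 100)
saving = baseline - filtered
print(f"{filtered_n} compounds assayed, ${saving:,.0f} saved at this stage")
```

Under those assumptions the assay stage drops from 1,000 to 550 compounds, saving $45,000 per campaign at this stage alone; the real number depends entirely on the baseline false-positive fraction.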

The Bottom Line

For the technical lead in 2025, the question is not "Should we use AI for folding?" It is "How fast can we integrate Boltz-2 into our screening loop?"

The shift from structure prediction to interaction modeling is not incremental—it is a paradigm change. The tools that bridge the affinity gap will define the next generation of computational drug discovery platforms. Boltz-2, with its open license, explicit affinity prediction, and generative capabilities, is currently the most accessible entry point into this new era.

The "Interaction Era" has begun.

Note: This analysis reflects the state of these tools as of late 2025. The field is evolving rapidly, and capabilities continue to improve with each model release.

#drugDiscovery #computationalBiology #AI #machineLearning #proteinStructure #Boltz2 #AlphaFold