Beyond the Uncanny Valley: Engineering Identity 2.0 in the Age of Agentic AI

Table of Contents

- Introduction: The Collapse of Traditional Trust Heuristics

- 1. Vishing at Scale: The Industrialization of Vocal Deception

- 2. The Death of the Video Call: Challenge-Response and Liveness

- 3. Passkeys vs. Biometrics: The Convergence on Liveness Detection

- 4. The Identity 2.0 Framework: NIST and the EU AI Act

- Architectural Summary: The 2026 Resilience Quotient

- Conclusion: Anchoring Trust in Physical Reality

Introduction: The Collapse of Traditional Trust Heuristics

By 2026, traditional trust heuristics have broken down. For decades, we secured the "perimeter" with firewalls and VPNs, but as real-time generative AI reaches a fidelity that people cannot reliably tell apart from a live human, the perimeter has moved.

In 2026, Identity is the new perimeter, and the tools we once used to verify it—voice cadence, visual recognition, and knowledge-based secrets—have transitioned from security assets into systemic vulnerabilities.

This post examines the technical landscape of identity verification in an era where synthetic media is indistinguishable from reality, and provides actionable frameworks for engineering resilient authentication systems.

1. Vishing at Scale: The Industrialization of Vocal Deception

In 2026, the "Mother's Maiden Name" is officially dead.

The collapse of Knowledge-Based Authentication (KBA) was accelerated by the industrialization of "Agentic AI"—autonomous systems that scrape LinkedIn and corporate metadata to craft personalized pretexts before launching surgical attacks.

The Voice Cloning Threshold

Today's attackers require minimal source material to weaponize voice:

| Audio Duration | Clone Fidelity | Detection Difficulty |
|---|---|---|
| 3 seconds | 85% voice match | Detectable by trained ear |
| 30 seconds | Indistinguishable | Human detection impossible |
| 2+ minutes | Full emotional range | Adaptive conversational capability |

These are not just recordings; they are real-time, AI-powered conversational bots that adapt to victim responses and execute full ransomware chains in under 25 minutes.

The Technical Bypass: OTP Harvesting

One-time password (OTP) bots now combine voice cloning with automated call triggering. The attack chain operates as follows:

- Trigger: Attacker initiates login, triggering MFA challenge

- Clone Activation: Bot calls victim using cloned "IT Support" voice

- Social Engineering: "Hi, this is IT Security. We detected suspicious activity on your account. Can you read me the code you just received?"

- Harvest: Victim reads OTP; attacker completes authentication

- Persistence: Full account compromise in under 60 seconds

From the victim's point of view, they never spoke to an attacker; they spoke to what their brain registered as a trusted colleague.

The Mitigation: Phishing-Resistant Authentication

The only effective countermeasure is removing human judgment from the authentication loop entirely:

- FIDO2 Hardware Keys: Hardware-backed cryptographic handshakes that cannot be phished, cloned, or socially engineered

- Passkey Enrollment: Binding authentication to specific devices with TPM-backed key storage

- Zero Phone-Based OTP: Eliminating SMS and voice OTP from all high-value workflows
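
The reason a FIDO2 assertion cannot simply be read out over the phone is that the browser records the origin it was talking to, and the server rejects anything produced for a different origin. The following is a minimal sketch of that server-side check on the WebAuthn `clientDataJSON` fields (`type`, `challenge`, `origin`). The domain names and the helper names `b64url` and `check_client_data` are hypothetical, and signature verification over the authenticator data is deliberately omitted, so this is an illustration of origin binding rather than a complete verifier.

```python
import base64
import json
import secrets


def b64url(data: bytes) -> str:
    """Base64url without padding, the encoding WebAuthn uses for challenges."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def check_client_data(client_data_json: bytes, expected_challenge: bytes,
                      expected_origin: str) -> bool:
    """Accept only an assertion made for our challenge at our origin."""
    data = json.loads(client_data_json)
    return (
        data.get("type") == "webauthn.get"
        and data.get("challenge") == b64url(expected_challenge)
        and data.get("origin") == expected_origin
    )


# Hypothetical relying party and look-alike phishing site.
ORIGIN = "https://login.example.com"
challenge = secrets.token_bytes(32)

legit = json.dumps({"type": "webauthn.get",
                    "challenge": b64url(challenge),
                    "origin": ORIGIN}).encode()
phished = json.dumps({"type": "webauthn.get",
                      "challenge": b64url(challenge),
                      "origin": "https://login.examp1e.com"}).encode()

print(check_client_data(legit, challenge, ORIGIN))    # assertion from the real site
print(check_client_data(phished, challenge, ORIGIN))  # assertion relayed from a look-alike
```

Unlike an OTP, there is no code for the victim to read aloud: the value that matters is written by the browser, not by the person on the call.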

2. The Death of the Video Call: Challenge-Response and Liveness

The 2024 deepfake CFO fraud, which cost a firm $25 million, was merely a proof-of-concept for the injection attacks we face in 2026.

Attackers have moved beyond "Presentation Attacks" (holding a photo to a camera) to "Injection Attacks," where synthetic video streams are fed directly into the OS data pipeline, bypassing the physical sensor entirely.

Attack Vector Evolution

| Generation | Attack Type | Method | Countermeasure |
|---|---|---|---|
| Gen 1 | Presentation | Photo/video held to camera | Basic liveness |
| Gen 2 | Replay | Pre-recorded deepfake | Active challenges |
| Gen 3 | Injection | Synthetic stream to OS | Hardware attestation |

Challenge-Response Protocols

To secure internal meetings, organizations have moved to Challenge-Response protocols designed to break current real-time synthesis models:

Physical Challenges

Ask a participant to turn their head fully to the side or wave a hand in front of their face. Most real-time models are trained largely on frontal data and struggle with profile views and occlusion, which leads to "ghosting" or facial mesh distortion.

Effective challenges include:

- Full 90-degree head rotation

- Hand passing between face and camera

- Holding a random physical object to the camera

- Writing a random word on paper and displaying it
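
A challenge only helps if the attacker cannot prepare for it, so the prompt should be drawn at random at call time. Here is a minimal sketch of a challenge generator built on Python's `secrets` module. The prompt list, the word list, and the function names are illustrative assumptions, not part of any standard protocol.

```python
import math
import secrets

# Hypothetical prompt pool based on the physical challenges listed above.
PHYSICAL_CHALLENGES = [
    "Turn your head a full 90 degrees to the left",
    "Turn your head a full 90 degrees to the right",
    "Pass your hand slowly between your face and the camera",
    "Hold the nearest physical object up to the camera",
]

# Hypothetical word pool; a real deployment would use a much larger list.
WORDS = ["harbor", "velvet", "copper", "lantern",
         "orchid", "granite", "falcon", "meadow"]


def issue_challenge() -> str:
    """Pick an unpredictable action and word using a CSPRNG."""
    action = secrets.choice(PHYSICAL_CHALLENGES)
    word = secrets.choice(WORDS)
    return f"{action}, then write '{word}' on paper and show it."


def challenge_entropy_bits() -> float:
    """Entropy of one challenge, assuming uniform independent choices."""
    return math.log2(len(PHYSICAL_CHALLENGES) * len(WORDS))


print(issue_challenge())
print(challenge_entropy_bits())
```

With 4 actions and 8 words there are 32 equally likely prompts, which is 5 bits of entropy. Growing the word list is the cheapest way to raise that number.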

Temporal Analysis

Detection systems now monitor for the 100-300ms drift between lip movements and audio—a latency artifact inherent in real-time AI synthesis. This "lip-sync lag" is a fundamental limitation of current architectures that require:

- Audio capture and processing

- Viseme (mouth shape) generation

- Facial mesh rendering

- Frame composition and streaming

Each step adds latency, and the accumulated drift is something automated systems can measure consistently even when a human viewer on a live call does not consciously register it.
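
One way to measure this drift is to cross-correlate a per-frame audio loudness envelope with a per-frame mouth-openness signal and find the shift that aligns them best. The sketch below assumes both signals have already been extracted and resampled to the video frame rate, which is the hard part in practice. The function name, the 25 fps frame interval, and the synthetic test signal are all assumptions made for illustration.

```python
def estimate_lag_ms(audio_env, mouth_open, frame_ms, max_lag_frames):
    """Return the video delay (ms) that best aligns mouth_open with audio_env.

    Both inputs are equal-length sequences sampled once per video frame.
    """
    def centered(xs):
        mean = sum(xs) / len(xs)
        return [x - mean for x in xs]

    a, m = centered(audio_env), centered(mouth_open)
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag_frames + 1):
        # Compare audio at frame t with video at frame t + lag.
        n = len(a) - lag
        score = sum(a[t] * m[t + lag] for t in range(n)) / n
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag * frame_ms


# Synthetic example: short speech bursts, with the mouth trailing by 5 frames.
audio = [1.0 if (t % 17) < 4 else 0.0 for t in range(200)]
mouth = [0.0] * 5 + audio[:-5]

lag = estimate_lag_ms(audio, mouth, frame_ms=40, max_lag_frames=10)
print(lag)                # estimated lag in milliseconds
print(100 <= lag <= 300)  # inside the 100-300ms band described above
```

A single estimate is noisy on real calls, so a detector would more plausibly track the lag over a sliding window and alert only on a sustained offset.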

Out-of-Band (OOB) Verification

For high-stakes decisions (financial transfers, access grants, personnel actions), the protocol is clear: end the video session and perform a callback to a pre-validated number stored in a hardware-protected directory.

This breaks the attack chain by forcing verification through a channel the attacker cannot control in real-time.
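
The key implementation detail is that the callback number must come from the pre-validated directory and never from the session being verified. A minimal sketch of that rule follows. The action names, the in-memory directory, and the example address and number are hypothetical stand-ins for a hardware-protected store.

```python
# Action categories taken from the high-stakes examples above (names are hypothetical).
HIGH_STAKES = {"financial_transfer", "access_grant", "personnel_action"}

# Hypothetical stand-in for a pre-validated, hardware-protected directory.
TRUSTED_DIRECTORY = {"cfo@example.com": "+1-555-0100"}


def callback_number(requester, action, number_offered_in_call=None):
    """Return the number to call back, or None if no callback is required.

    number_offered_in_call is accepted only to make the point that it is
    ignored: contact details supplied inside the session are never trusted.
    """
    if action not in HIGH_STAKES:
        return None
    if requester not in TRUSTED_DIRECTORY:
        raise LookupError(f"no pre-validated number for {requester}")
    return TRUSTED_DIRECTORY[requester]


print(callback_number("cfo@example.com", "financial_transfer",
                      number_offered_in_call="+1-555-0199"))
```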

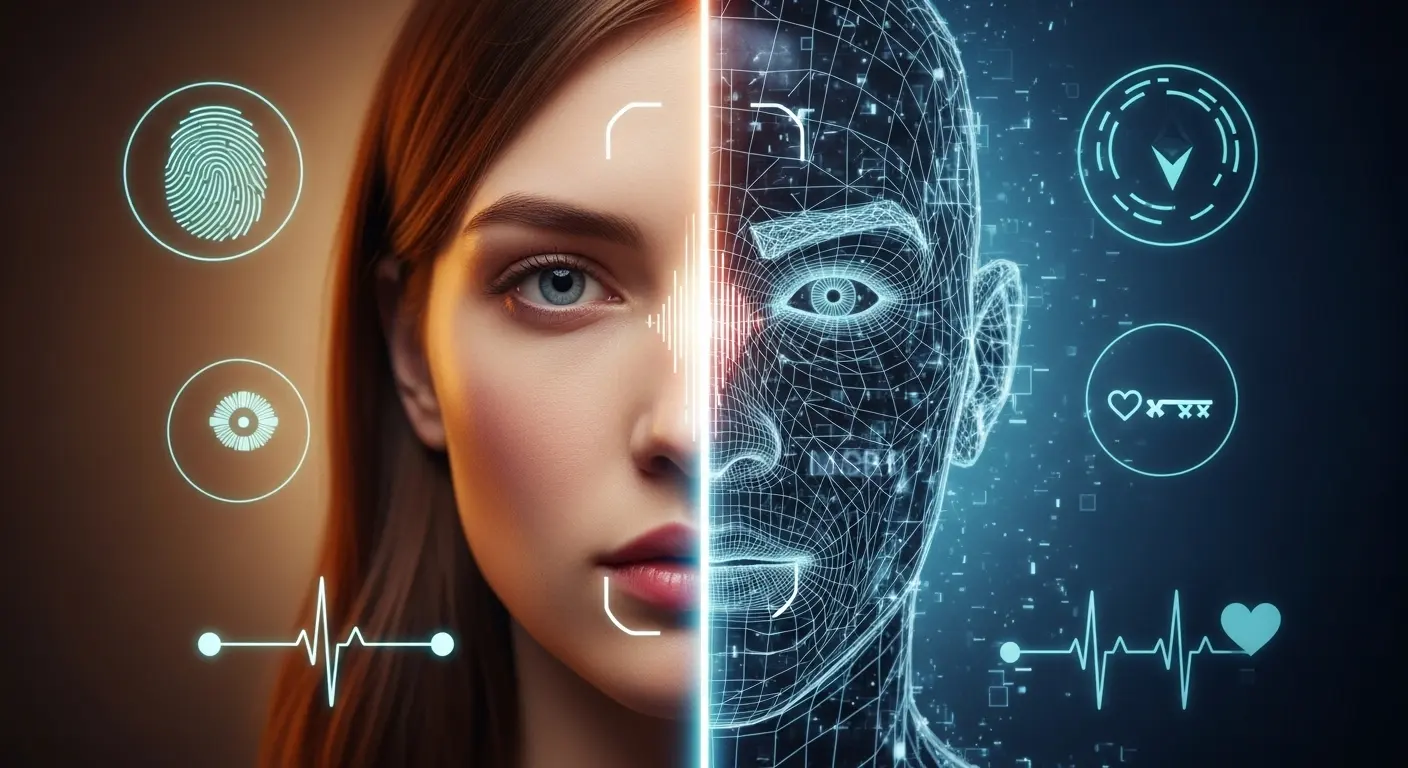

3. Passkeys vs. Biometrics: The Convergence on Liveness Detection

While Passkeys (FIDO2/WebAuthn) have effectively ended remote phishing by binding keys to devices, they cannot prove the presence of a live human. The key proves the device is present—not who is holding it.

In 2026, the industry has pivoted to "Liveness Detection" as a mandatory secondary factor for high-risk transactions.

Passive Liveness: The Current Gold Standard

Unlike active liveness (asking a user to blink or smile), passive liveness runs in the background without user interaction. This approach analyzes:

Remote Photoplethysmography (rPPG)

By detecting micro-changes in skin tone caused by blood flow, cameras can verify the presence of a living circulatory system. Synthetic faces, no matter how realistic, do not pulse.
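
As a rough illustration of the idea, the sketch below takes the mean green-channel value of the face region in each frame and looks for a dominant frequency in the plausible heart-rate band. It uses a direct discrete Fourier transform from the standard library. The function name, the 0.7 to 4.0 Hz band, and the synthetic input are assumptions, and a production system would add face tracking, motion compensation, and a signal-to-noise threshold, none of which are shown here.

```python
import cmath
import math


def estimate_pulse_bpm(green_means, fps, low_hz=0.7, high_hz=4.0):
    """Return the dominant in-band frequency in beats per minute, or None."""
    n = len(green_means)
    mean = sum(green_means) / n
    signal = [g - mean for g in green_means]  # remove the constant skin tone

    best_freq, best_power = None, 0.0
    for k in range(1, n // 2):
        freq = k * fps / n
        if not (low_hz <= freq <= high_hz):
            continue
        # Direct DFT coefficient for bin k.
        coeff = sum(s * cmath.exp(-2j * math.pi * k * t / n)
                    for t, s in enumerate(signal))
        power = abs(coeff) ** 2
        if power > best_power:
            best_freq, best_power = freq, power
    return None if best_freq is None else best_freq * 60.0


# Synthetic 10 s clip at 30 fps with a faint 1.2 Hz (72 bpm) pulse.
fps, seconds = 30, 10
live = [100.0 + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps)
        for t in range(fps * seconds)]
flat = [100.0] * (fps * seconds)  # a face region with no periodic variation

print(estimate_pulse_bpm(live, fps))
print(estimate_pulse_bpm(flat, fps))
```

The perfectly flat series is an idealization: real footage is noisy, so the decision would rest on how strongly the peak stands out from the rest of the band, not on whether a peak exists at all.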

Skin Texture Analysis

Deep learning models trained on dermatological datasets can identify the micro-texture patterns of human skin that current generative models fail to reproduce at the sub-pixel level.

Depth Information

Time-of-flight sensors and structured light (as found in Face ID) create 3D maps that synthetic 2D streams cannot replicate without specialized hardware.

Hardware-Level Attestation

New silicon architectures have fundamentally changed the trust model for video capture:

| Architecture | Capability | Security Guarantee |
|---|---|---|
| NVIDIA Rubin | Hardware watermarking | Cryptographic proof of origin |
| Intel Jaguar Shores | Sensor attestation | Physical lens verification |
| Apple A-Series | Secure Enclave capture | Tamper-evident frame signing |

These sensors embed a cryptographic "proof of origin" into every video frame at the moment of capture, ensuring the data stream originated from a physical lens, not a virtual camera driver.

The Attestation Chain

Physical Sensor (photons) → Secure Element (private key) → Signed Frame (timestamp) → Verified Stream (trust anchor)

If any link in this chain is broken—if the frame cannot prove it came from attested hardware—the identity verification fails.
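
The chain can be sketched as per-frame tags in which each tag covers the frame hash, its timestamp, and the previous tag, so that a swapped or reordered frame breaks verification. Real sensor attestation would use an asymmetric signature from a key held in the secure element, with the verifier holding only the public key. The sketch below substitutes an HMAC with a shared key purely because the Python standard library has no asymmetric signing, and every name in it is hypothetical.

```python
import hashlib
import hmac
import struct

GENESIS = b"\x00" * 32  # assumed starting value for the first frame's chain link


def sign_frame(device_key, frame, timestamp_ms, prev_tag):
    """Tag = MAC over (previous tag, timestamp, frame hash). Stand-in for a signature."""
    msg = prev_tag + struct.pack(">Q", timestamp_ms) + hashlib.sha256(frame).digest()
    return hmac.new(device_key, msg, hashlib.sha256).digest()


def sign_stream(device_key, frames_with_ts):
    """What the capture hardware would do: emit (frame, timestamp, tag) triples."""
    prev_tag, out = GENESIS, []
    for frame, ts in frames_with_ts:
        tag = sign_frame(device_key, frame, ts, prev_tag)
        out.append((frame, ts, tag))
        prev_tag = tag
    return out


def verify_stream(device_key, stream):
    """True only if every frame verifies and timestamps strictly increase."""
    prev_tag, last_ts = GENESIS, -1
    for frame, ts, tag in stream:
        expected = sign_frame(device_key, frame, ts, prev_tag)
        if ts <= last_ts or not hmac.compare_digest(tag, expected):
            return False
        prev_tag, last_ts = tag, ts
    return True


key = b"k" * 32  # placeholder device key for the demo
stream = sign_stream(key, [(b"frame-a", 0), (b"frame-b", 33), (b"frame-c", 66)])

tampered = list(stream)
tampered[1] = (b"injected-frame", 33, stream[1][2])  # swap pixels, keep the old tag

print(verify_stream(key, stream))
print(verify_stream(key, tampered))
```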

4. The Identity 2.0 Framework: NIST and the EU AI Act

The regulatory landscape has caught up to the synthetic threat.

NIST SP 800-63-4 Requirements

NIST SP 800-63-4 now mandates that high-assurance environments implement separate protections for both:

- Presentation Attack Detection (PAD): Defending against physical artifacts (photos, masks, screens) presented to cameras

- Injection Attack Detection (IAD): Defending against synthetic streams injected into the software pipeline

This bifurcated approach recognizes that these are fundamentally different threat vectors requiring distinct technical controls.

EU AI Act Mandates

The EU AI Act (operational as of August 2026) mandates that any synthetic content be marked in a machine-readable format. For technical teams, this creates both obligations and opportunities:

Obligations:

- All AI-generated content must contain embedded provenance metadata

- Synthetic media used in communications must be disclosed

- Identity verification systems must be auditable for bias

Opportunities:

- Legitimate synthetic content becomes detectable by design

- Undisclosed synthetic media becomes prima facie evidence of malicious intent

- Regulatory alignment creates clear liability boundaries

Implementation Requirements

For technical teams, compliance requires:

Identity-First Zero Trust

Moving from location-based trust to cryptographic identity binding. Every request must be verified based on:

- Cryptographic proof of who the workload is

- Current security posture of the requesting entity

- Context of the request (time, location, behavior patterns)

- Hardware attestation of the capture device
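
The four signals above can be combined into a single per-request decision. The following is a minimal sketch assuming each signal has already been evaluated upstream. The field names, the three outcomes, and the 0.7 risk threshold are illustrative choices rather than values from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestSignals:
    identity_verified: bool   # cryptographic proof of the caller's identity
    posture_compliant: bool   # current security posture of the requester
    context_risk: float       # 0.0 (normal) to 1.0 (highly anomalous)
    capture_attested: bool    # capture device passed hardware attestation


def decide(signals: RequestSignals, risk_threshold: float = 0.7) -> str:
    """Return 'allow', 'step_up', or 'deny' for a single request."""
    if not signals.identity_verified or not signals.posture_compliant:
        return "deny"
    if not signals.capture_attested or signals.context_risk >= risk_threshold:
        return "step_up"  # e.g. trigger an additional challenge
    return "allow"


print(decide(RequestSignals(True, True, 0.1, True)))
print(decide(RequestSignals(True, True, 0.9, True)))
print(decide(RequestSignals(False, True, 0.1, True)))
```

Note that network location does not appear anywhere in the decision, which is the point of moving away from location-based trust.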

SPIFFE/SPIRE Standards

Standardizing the issuance and federation of cryptographic identities for machine-to-machine communication, treating AI agents as first-class actors in the IAM lifecycle.

This means:

- Every AI agent receives a cryptographic identity (SVID)

- Identities are short-lived and continuously rotated

- Cross-system federation is standardized

- Audit trails capture the full identity chain
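
To make the identity format concrete, a SPIFFE ID is a URI of the form `spiffe://trust-domain/path`. The sketch below parses one and applies two of the checks implied above: the trust domain must be one we federate with, and the identity must not have expired. It does not validate the SVID's certificate or JWT signature, and the agent path, trust domain, and function names are hypothetical.

```python
import re
import time
from urllib.parse import urlsplit

# Simplified trust-domain character check (lowercase letters, digits, '.', '-', '_').
_TRUST_DOMAIN = re.compile(r"^[a-z0-9._-]+$")


def parse_spiffe_id(uri: str):
    """Split a SPIFFE ID into (trust_domain, path) or raise ValueError."""
    parts = urlsplit(uri)
    if parts.scheme != "spiffe" or parts.query or parts.fragment:
        raise ValueError(f"not a SPIFFE ID: {uri!r}")
    if not _TRUST_DOMAIN.match(parts.netloc):
        raise ValueError(f"invalid trust domain: {parts.netloc!r}")
    return parts.netloc, parts.path


def is_accepted(uri, expires_at, trusted_domains, now=None):
    """Accept only unexpired identities from a federated trust domain."""
    now = time.time() if now is None else now
    try:
        trust_domain, _ = parse_spiffe_id(uri)
    except ValueError:
        return False
    return trust_domain in trusted_domains and expires_at > now


agent = "spiffe://example.org/agents/invoice-bot"  # hypothetical AI agent identity
print(parse_spiffe_id(agent))
print(is_accepted(agent, expires_at=1300, trusted_domains={"example.org"}, now=1000))
print(is_accepted(agent, expires_at=900, trusted_domains={"example.org"}, now=1000))
```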

Architectural Summary: The 2026 Resilience Quotient

The modern security problem is no longer attackers breaking in; it is attackers logging in.

We define the Resilience Quotient ($RQ$) as the probability that at least one of $n$ independent layers detects an injection, where each layer's detection probability depends on the entropy of the challenges ($H$) and the strength of hardware attestation ($A$):

$$RQ = 1 - \prod_{i=1}^{n} (1 - P(D_i | H, A))$$

Where:

- $n$ = number of independent detection layers

- $P(D_i | H, A)$ = probability of detection at layer $i$ given challenge entropy and attestation

- $H$ = entropy of challenge-response protocols

- $A$ = strength of hardware attestation

Practical Interpretation

| Layers ($n$) | Per-Layer Detection | Resilience Quotient |
|---|---|---|
| 1 | 90% | 0.90 |
| 2 | 90% | 0.99 |
| 3 | 90% | 0.999 |
| 4 | 90% | 0.9999 |

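Each additional 90% layer cuts the probability of a missed attack tenfold, so detection improves exponentially with $n$, but only if the layers are truly independent. Layers that share a failure mode (for example, two checks that both trust the same camera driver) behave more like a single layer.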
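
The table values follow directly from the formula. Below is a short sketch that computes $RQ$ from a list of per-layer detection probabilities, treating each $P(D_i | H, A)$ as a single number and assuming independence. The function name is an illustrative choice.

```python
import math


def resilience_quotient(detection_probs):
    """RQ = 1 - product of per-layer miss probabilities (independence assumed)."""
    miss = math.prod(1.0 - p for p in detection_probs)
    return 1.0 - miss


# Reproduce the table: n identical layers, each detecting 90% of attacks.
for n in range(1, 5):
    print(n, round(resilience_quotient([0.9] * n), 4))

# Layers rarely have equal strength; mixed values work the same way.
print(round(resilience_quotient([0.9, 0.6, 0.5]), 4))
```

The mixed example shows that even two weak layers (60% and 50%) lift a 90% layer to 0.98, which is why a mediocre but independent check can still be worth deploying.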

The Defense Stack

A complete Identity 2.0 implementation includes:

- Layer 1 - Cryptographic: FIDO2/Passkeys with hardware binding

- Layer 2 - Biometric: Passive liveness with rPPG analysis

- Layer 3 - Behavioral: Continuous authentication based on interaction patterns

- Layer 4 - Hardware: Sensor attestation with cryptographic proof of origin

- Layer 5 - Contextual: Risk-based challenges triggered by anomaly detection

Conclusion: Anchoring Trust in Physical Reality

In a world where seeing is no longer believing, we must anchor our trust in two immutable foundations:

- The physical reality of liveness: Biological signals that synthetic systems cannot yet replicate

- The mathematical certainty of cryptographic proof: Hardware-backed attestation that cannot be forged

The uncanny valley has been crossed. The visual and auditory heuristics that served humanity for millennia are now exploitable attack surfaces. But this is not cause for despair—it is cause for architectural evolution.

Identity is the perimeter. Secure it accordingly.

Action Items for Security Teams

- Audit all voice-based authentication and plan deprecation

- Implement FIDO2 hardware keys for privileged access

- Deploy injection attack detection for video conferencing

- Establish out-of-band verification protocols for high-value decisions

- Evaluate hardware attestation capabilities in endpoint fleet

- Train staff on challenge-response protocols for video calls

- Align identity architecture with NIST SP 800-63-4 requirements

The synthetic threat is real, but so are the defenses. The organizations that thrive in 2026 will be those that recognized identity as the new perimeter—and invested accordingly.